Nonlinear Audiosystems Design Tools

Audio programming, nonlinear audio design May 18, 2020

As a game audio designer, I am hindered in my creative process by the discrepancy between the nonlinearity of my work and the linear character of standard audio production software.

Standard linear audio production tools offer little to no functionality for nonlinear sequencing, transitioning, parameter adaption and probability. Furthermore, linear sequencers are not optimised to provide a visual representation of nonlinear systems. Current standard middleware solutions focus more on optimising implementation than on tackling the obstructions in the design stage of a project. These issues cause testing and prototyping to take unnecessarily long and obscure communication with collaborators. This obstructs the workflow and discourages innovation.

Nonlinear Audiosystems Design Tools, or NADT, is a range of experiments in the form of prototype tools that tackle these issues. By prototyping various tools that address the problems caused by the nonlinearity of video games in relation to the linearity of audio production software, I hope to improve the process of designing and testing nonlinear audiosystems. The project was created as part of my thesis on Tools for Designing Nonlinear Game Audio Systems.

Main issues

The current issues fall into three general subjects: the inability to quickly test and prototype audio systems with their audio, the lack of overview that current standard tools provide (which also hinders communication), and the lack of visual and interactive context.

With an approach that favours quick testing and prototyping, I hope to tackle the problem from multiple angles. This should provide a more general sense of what works well and what doesn't, before focussing on specific elements. The main disadvantage of this approach, however, is that many interesting details will not (yet) be addressed.

Modular 'flexible' tools

To function as a proof of concept for how to tackle these issues, the tools need to be flexible and can't be specific to any one game or phase in game audio.

'.. the game industry is undergoing constant change .. . The only solution to this unpredictable situation is granularity--that is, the goal to build a flexible set of tools that are constantly able to update, evolve and adapt' - Florian Füsslin, Audio Director at Crytek (Game Audio Programming: Principles and Practices)

Current status

This project is still in progress. So far, I have worked on three experiments, all of which have reached their research goals but can be explored much further in the future.

Automatic sound loading and parsing

The first experiment is a Python terminal application that allows the user to quickly test quantised nonlinear audio systems. The project tackles the disruption caused by the time and effort it takes to get from creating audio to being able to hear and test it within its nonlinear system (and eventually within the context of the game). Audio is exported directly into specific folders using specific file and folder names, and the application automatically sequences and layers the audio based on the data obtained from those names.

Directly loading the audio, with data about transitions and layering given in the file and folder names, allows for quicker testing. The video shows this experiment demonstrated on an early musical concept for Rookery, a game currently being developed. Click here for more information on the project.

Quantised synchronisation

I decided to start with quantised grid-based bar synchronisation, based on the following quote from Colin Walder:

'For basic synchronization, I start with bars, beats and grid since it is trivial to prepare the interactive music to support these, and because they are the most straightforward to understand in terms of mapping to gameplay.' (Game Audio Programming: Principles and Practices)

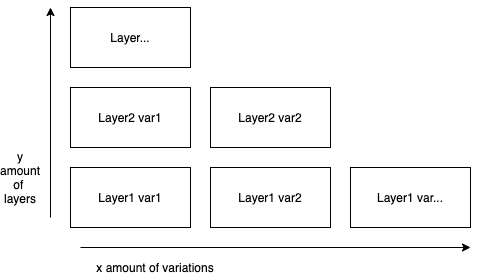

The system to be tested with this tool is based on layers that each contain different variations. Audio is sequenced at a given bpm and loop length. For every layer, a variation is chosen and played. The data obtained from a filename indicates which variations a variation within a layer can transition to. This means that every layer transitions independently of the others. Because of this, every variation of every layer needs to be loopable over every variation of the other layers.
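The layer and variation model described above can be sketched roughly as follows. This is a minimal illustration and not the tool's actual code: the function names `loop_seconds` and `next_variations`, the example data, and the uniform random choice between allowed transitions are all assumptions made for the example.

```python
import random

def loop_seconds(bpm, beats):
    """Length of one loop in seconds for a given tempo and number of beats."""
    return beats * 60.0 / bpm

def next_variations(current, transitions, rng=random):
    """Pick the next variation for every layer, independently of the other layers.

    current:     {layer_number: variation_number} that is playing now
    transitions: {layer_number: {variation_number: [allowed next variations]}}
    """
    chosen = {}
    for layer, variation in current.items():
        # Assumption: a variation without listed targets simply keeps looping.
        options = transitions[layer].get(variation) or [variation]
        chosen[layer] = rng.choice(options)
    return chosen

# Hypothetical data: two layers with two variations each.
transitions = {
    1: {1: [1, 2], 2: [1]},
    2: {1: [2], 2: [1, 2]},
}
current = {1: 1, 2: 1}

print(f"loop length: {loop_seconds(120, 16):.1f} s")
for _ in range(4):  # one choice per loop boundary
    current = next_variations(current, transitions)
    print(current)
```

Because each layer picks its next variation without looking at the others, any combination of variations can end up playing together, which is why all of them need to loop well over each other.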

File loading

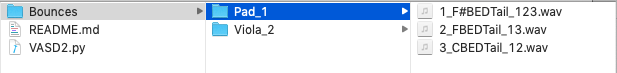

To add a layer, a folder needs to be created in the ‘Bounces’ folder. The title of every folder should end with an underscore followed by the number of the layer. Audio files containing variation loops can be placed within every folder. A variation loop's filename should start with the number of the variation, followed by its title, followed by the numbers of the variations it can transition to, all separated by underscores.
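The naming convention above could be parsed along these lines. This is a sketch under assumptions rather than the project's own parser: the function names and the example names `Drums_1` and `1_Intro_2_3.wav` are hypothetical, and the code assumes that a title's final underscore-separated word is not itself a number.

```python
from pathlib import Path

def parse_layer_folder(folder_name):
    """Split a folder title into (title, layer number).

    The layer number is whatever follows the last underscore.
    Raises ValueError if the title does not end in '_<number>'.
    """
    title, _, number = folder_name.rpartition("_")
    return title, int(number)

def parse_variation_file(file_name):
    """Split a filename into (variation number, title, transition targets)."""
    parts = Path(file_name).stem.split("_")  # drop the extension, split on '_'
    variation = int(parts[0])
    rest = parts[1:]
    # Trailing numeric parts are transition targets; what is left is the title.
    split = len(rest)
    while split > 0 and rest[split - 1].isdigit():
        split -= 1
    title = "_".join(rest[:split])
    targets = [int(part) for part in rest[split:]]
    return variation, title, targets

print(parse_layer_folder("Drums_1"))            # ('Drums', 1)
print(parse_variation_file("1_Intro_2_3.wav"))  # (1, 'Intro', [2, 3])
```

Reading the transition targets from the end of the name keeps titles with underscores in them intact, at the cost of the restriction on numeric title endings mentioned above.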

Status

Quick testing by directly bouncing the audio with specific titles saves time. However, the options are still very limited and files need to be named very specifically. User input could be avoided completely by obtaining the bpm and the number of beats from the folder or file titles as well. This system is very simple and can only test a specific type of nonlinear sequencing. However, it has achieved its goal of testing this type of approach.

Check out the project on GitHub for more information

Visual Parameter Adaption

VPA is a prototype tool that combines a visual overview and interface with the automatic file loading functionality carried over from the previous experiment. It looks into improving the process of prototyping nonlinear systems and improving (interdisciplinary) communication. Because audio is a nonvisual medium and is therefore always experienced linearly, it can prove difficult to explain or fully understand nonlinear audiosystems design.

Files are loaded from a given folder location, and information on layers and transition possibilities is obtained from them. Layerboxes can be spawned from the Spawner. A layerbox holds a layer with all its variations. When dragged into the sequencer, the layerbox is given an X and a Y parameter. These parameters can be set to certain values by moving the X and Y sliders. The transitions between variations within layers aren't visualised (yet), but behave in the same way as in the previous experiment, where the numbers at the end of a filename indicate which variations a variation can transition to.

As the current prototype is bound by time constraints and made to quickly test the most important features, I have created a mockup showcasing some future possibilities. The mockup shows how components can be loaded in dynamically, based on what is useful for the project. This also makes it easier to add components in the future.

As visual context is an important part of game audio, a component to load in visual context has been added. In the future I'd like the program to style the entire interface based on input visuals. I'd also like to add some simple 'template' games, so that the audio can be tested in its interactive context as well. The 'Layer Containers' now show which variation of the layer is being played. As one-shots are another important context to test with looping audio, a component to play sound effects has also been added. In the future I'd like to add sidechaining and randomisation (pitch, gain, etc.) functionalities.

Project Rookery

The video shows the project being used to test a musical concept for project Rookery. Rookery is a walking simulator set in Victorian-era London, currently under development. Click here for more information on the project.

Check out the project on GitHub for more information

Procedural audiosystems design and implementation

The latest experiment is a prototype for a framework/tool designed to improve the prototyping and implementation of procedural music systems. Even though procedural sound design has become somewhat standard in game audio, I find procedural music is still relatively underrepresented. It can be difficult, time consuming and expensive to prototype, test and implement. Because it can be difficult to test, it is often a risk to even attempt procedural music. Procedural music won't work well for every game, but because it is the most dynamic form of nonlinear music, I believe it should be easier to try out. Games such as Mini Metro and Monkey Island have successfully showcased how procedural music can greatly elevate an entire game.

PAS allows for quick testing and implementation of procedural music, using a sample-based sequencer. The automatic file loading functionality has again been carried over from the first experiment. This project shies away from a visual approach, to put more focus on quick implementation and building a general modular framework. PAS's more universal approach and lack of visuals make it an easy library to implement in any game. This does, however, mean that the programmer also has to be the composer, and vice versa.
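To give an idea of what composing in code with a sample-based sequencer can look like, here is a minimal sketch. It is not PAS's API: the function `generate_pattern`, the weighted random choice per step and the sample names are all hypothetical, and the pattern is only printed, not played.

```python
import random

def generate_pattern(pools, steps=16, seed=None):
    """Build a step pattern by picking a sample (or a rest) for every step.

    pools: {track_name: [(sample_name_or_None, weight), ...]}
    Returns {track_name: [sample_name_or_None, ...]} with one entry per step.
    """
    rng = random.Random(seed)  # a fixed seed makes the pattern reproducible
    pattern = {}
    for track, choices in pools.items():
        names = [name for name, _ in choices]
        weights = [weight for _, weight in choices]
        pattern[track] = rng.choices(names, weights=weights, k=steps)
    return pattern

# Hypothetical sample pools; None means the step stays silent.
pools = {
    "kick": [("kick.wav", 1), (None, 3)],
    "hat": [("hat_closed.wav", 4), ("hat_open.wav", 1), (None, 2)],
}

pattern = generate_pattern(pools, steps=16, seed=7)
for track, samples in pattern.items():
    # 'x' marks a step that triggers a sample, '.' marks a rest.
    print(f"{track:>4} " + "".join("x" if sample else "." for sample in samples))
```

Here the musical decisions live in the weights and the sample pools, which illustrates why the programmer and the composer end up being the same person in this kind of workflow.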

Procedural Imagination

PAS is made as a general framework/tool, but is also used for and tested on 'Procedural Imagination', a procedurally generated game world that dynamically responds to the player's actions. It is currently being developed by Josien Vos.

Check out the project on GitHub for more information